Humans are masters of self-reflection. We use memories of past experiences to create an imaginary version of ourselves that acts out actions before we perform them. We refer to this imaginary version of ourselves as a "self-model." While creating a self-model is second nature to humans, robots have been unable to learn one themselves, relying instead on labor-intensive, hand-crafted simulators that inevitably become outdated as the robot develops. Recent advances in robotics and deep reinforcement learning have allowed robots to make huge strides by learning or planning entirely in simulation, but this reliance ties progress to the simulator and makes development slower and more expensive. We believe that creating an accurate and reliable self-model is necessary to take the next step towards a more general AI that can successfully learn over its entire lifetime, adapting to new situations as they come.

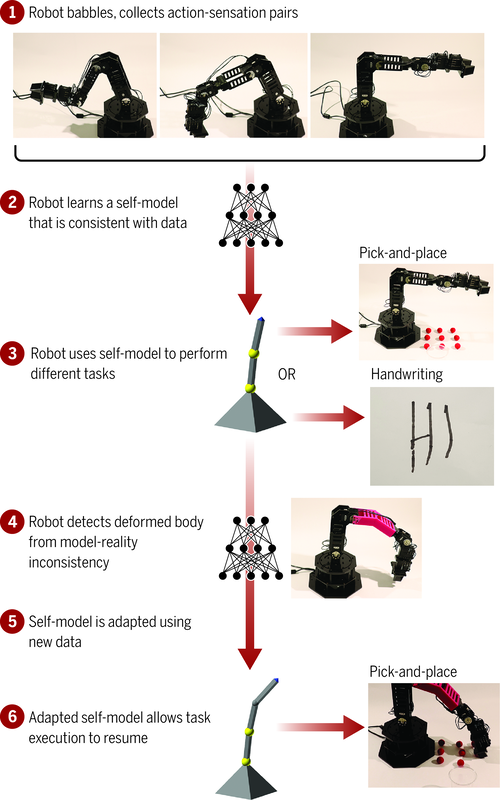

There have been other attempts to create robot self-models, but in our work we make no assumptions about physics, robot geometry, or other properties of the robot. Instead, we record a sequence of states and actions as the robot moves randomly. The states can contain any information about the robot, from sensor readings to joint positions, and the actions correspond to motor commands. We pass this data to our deep learning model so that it learns to predict the next state from the preceding states and actions. The robot can then use this self-model to "imagine" what will happen to its own body if it takes certain actions from a given starting state. This is similar to how a baby moves randomly at first and eventually builds a comprehensive mental picture of the world and of how its body relates to it. Once this initial self-model exists, any change to the body can be detected immediately, because the predicted states diverge sharply from reality. The robot can then restart the process of collecting data about itself to adapt its self-model to its new body shape.
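
The loop described above (random data collection, learning to predict the next state, detecting a body change, then re-learning) can be sketched on a toy problem. This is a minimal illustration under loud assumptions, not the method from the paper: the one-joint robot, its gains, the threshold, and every function name are hypothetical, and a two-parameter least-squares fit stands in for the deep learning model.

```python
import random

def make_robot(gain):
    """Hypothetical 1-joint robot: the angle changes by gain * motor command."""
    def step(state, action):
        return state + gain * action
    return step

def collect_random_data(step, n_steps, rng):
    """Move randomly and record (state, action, next_state) triples."""
    data, state = [], 0.0
    for _ in range(n_steps):
        action = rng.uniform(-1.0, 1.0)
        next_state = step(state, action)
        data.append((state, action, next_state))
        state = next_state
    return data

def fit_self_model(data):
    """Least-squares fit of next_state ~ w_s * state + w_a * action.

    Stand-in for the deep network: solves the 2x2 normal equations directly.
    """
    sss = sum(s * s for s, a, n in data)
    ssa = sum(s * a for s, a, n in data)
    saa = sum(a * a for s, a, n in data)
    ssn = sum(s * n for s, a, n in data)
    san = sum(a * n for s, a, n in data)
    det = sss * saa - ssa * ssa
    w_s = (ssn * saa - ssa * san) / det
    w_a = (sss * san - ssa * ssn) / det
    return w_s, w_a

def predict(model, state, action):
    """The robot 'imagines' its next state for a given action."""
    w_s, w_a = model
    return w_s * state + w_a * action

def mean_prediction_error(model, data):
    """Average gap between imagined and observed next states."""
    return sum(abs(predict(model, s, a) - n) for s, a, n in data) / len(data)

rng = random.Random(0)
THRESHOLD = 0.05  # hypothetical divergence level that signals a body change

# 1. Learn a self-model of the intact robot from random motion.
intact_robot = make_robot(gain=0.5)
model = fit_self_model(collect_random_data(intact_robot, 200, rng))
err_intact = mean_prediction_error(model, collect_random_data(intact_robot, 200, rng))

# 2. The body changes (weaker joint); predictions now diverge from reality.
changed_robot = make_robot(gain=0.2)
changed_data = collect_random_data(changed_robot, 200, rng)
err_changed = mean_prediction_error(model, changed_data)

# 3. On divergence, re-learn the self-model from data on the new body.
adapted = fit_self_model(changed_data) if err_changed > THRESHOLD else model

print("error on intact body:", err_intact)
print("error after change:  ", err_changed)
print("adapted action weight:", adapted[1])
```

The model is only ever given recorded states and actions, never the gain itself, which mirrors the idea of assuming nothing about physics or geometry. A real robot would have noisy, high-dimensional states, where a learned threshold and a neural network would replace the fixed threshold and linear fit used here.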

Once the robot has formed a reliable self-model, it can use that self-model in the same way modern robots use simulators. The robot can plan within the self-model and then execute the resulting plan to perform tasks in real life. We have shown that these self-models are informative enough to perform a pick-and-place task with an articulated robotic arm, in either open or closed loop. In closed loop, our self-model diverged from reality less than a hand-coded simulator did.
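
The difference between open-loop and closed-loop use of a self-model can be illustrated with a small sketch. Everything here is an assumption made for illustration: the one-joint robot, the deliberately imperfect model gain, the grid of candidate actions, and the greedy one-step planner are hypothetical and do not reproduce the planner or the results of the paper.

```python
REAL_GAIN = 0.5    # hypothetical true response of the joint to a motor command
MODEL_GAIN = 0.45  # hypothetical, slightly wrong gain held by the self-model
CANDIDATE_ACTIONS = [i / 10 for i in range(-10, 11)]  # motor commands in [-1, 1]

def real_step(state, action):
    """What actually happens on the (toy) physical robot."""
    return state + REAL_GAIN * action

def imagine(state, action):
    """What the self-model predicts will happen."""
    return state + MODEL_GAIN * action

def plan_action(state, target):
    """Pick the action whose imagined outcome lands closest to the target."""
    return min(CANDIDATE_ACTIONS, key=lambda a: abs(imagine(state, a) - target))

def run_open_loop(start, target, horizon):
    """Plan the whole sequence in imagination, then execute it blindly."""
    plan, imagined = [], start
    for _ in range(horizon):
        action = plan_action(imagined, target)
        plan.append(action)
        imagined = imagine(imagined, action)
    state = start
    for action in plan:
        state = real_step(state, action)
    return state

def run_closed_loop(start, target, horizon):
    """Re-plan at every step from the state actually observed."""
    state = start
    for _ in range(horizon):
        action = plan_action(state, target)
        state = real_step(state, action)
    return state

TARGET = 2.0
open_err = abs(run_open_loop(0.0, TARGET, 10) - TARGET)
closed_err = abs(run_closed_loop(0.0, TARGET, 10) - TARGET)
print("open-loop error:  ", open_err)
print("closed-loop error:", closed_err)
```

In open loop the model's small error accumulates over the whole plan, while in closed loop each new observation corrects the drift. This is why a self-model does not have to be perfect to be useful for planning.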


Learn more: R. Kwiatkowski, H. Lipson, Task-agnostic self-modeling machines. Sci. Robot. 4, eaau9354 (2019).

Project participants: Robert Kwiatkowski and Hod Lipson
