Beyond Categorical Label Representations for Image Classification


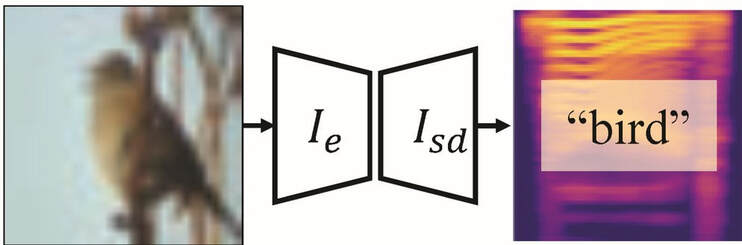

We find that the way we choose to represent data labels can have a profound effect on the quality of trained models. For example, training an image classifier to regress audio labels rather than traditional categorical probabilities produces a more reliable classification. This result is surprising, considering that audio labels are more complex than simple numerical probabilities or text. We hypothesize that high-dimensional, high-entropy label representations are generally more useful because they provide a stronger error signal. We support this hypothesis with evidence from various label representations, including constant matrices, spectrograms, shuffled spectrograms, Gaussian mixtures, and uniform random matrices of various dimensionalities. Our experiments reveal that high-dimensional, high-entropy labels achieve accuracy comparable to text (categorical) labels on the standard image classification task, but features learned through our label representations are more robust under various adversarial attacks and more effective when only a limited amount of training data is available. These results suggest that label representation may play a more important role than previously thought.
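To make the idea of a label "representation" concrete, here is a minimal NumPy sketch of two of the representations named above (constant matrices and uniform random matrices), a histogram-based estimate of their entropy, and one possible way to turn a regressed output back into a class. Everything here is an illustrative assumption, not the authors' released code: the helper names `make_labels`, `value_entropy`, and `decode` are hypothetical, the class-specific constant values, the 256-bin entropy estimate, and nearest-neighbour (L2) decoding are choices made for this sketch, and spectrogram and Gaussian-mixture labels are omitted.

```python
import numpy as np


def make_labels(num_classes, shape, kind, seed=0):
    """Hypothetical helper: one target matrix per class, shape (num_classes, *shape)."""
    rng = np.random.default_rng(seed)
    if kind == "constant":
        # Assumption: every entry of class k's matrix equals one class-specific
        # value, evenly spaced in [0, 1]. Low entropy: a single repeated value.
        values = np.linspace(0.0, 1.0, num_classes)
        return np.stack([np.full(shape, v) for v in values])
    if kind == "uniform":
        # Each class gets an independent matrix of U[0, 1) samples.
        # High entropy: values spread across the whole range.
        return rng.uniform(0.0, 1.0, size=(num_classes, *shape))
    raise ValueError(f"unknown label kind: {kind}")


def value_entropy(label, bins=256):
    """Shannon entropy (bits) of the histogram of a label's entries over [0, 1]."""
    counts, _ = np.histogram(label, bins=bins, range=(0.0, 1.0))
    p = counts[counts > 0] / counts.sum()
    return float(-(p * np.log2(p)).sum())


def decode(prediction, labels):
    """Assumed decoding rule: pick the class whose label is nearest in L2 distance."""
    diffs = labels.reshape(len(labels), -1) - prediction.reshape(1, -1)
    return int(np.argmin(np.linalg.norm(diffs, axis=1)))
```

For example, with `labels = make_labels(10, (64, 64), "uniform")`, a constant label has an entropy of 0 bits, while a 64×64 uniform label comes out close to the 8-bit maximum for 256 bins. A slightly noisy regression output such as `labels[3] + 0.05 * noise` still decodes to class 3, because random high-dimensional labels sit far apart from one another.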



Press Release: www.engineering.columbia.edu/press-releases/hod-lipson-deep-learning-networks-prefer-human-voice

Source code will be released at: github.com/BoyuanChen/label_representations

Project participants

Boyuan Chen, Yu Li, Sunand Raghupathi, Hod Lipson


Related Publications

Chen et al. (2021). Beyond Categorical Label Representations for Image Classification. International Conference on Learning Representations (ICLR).
